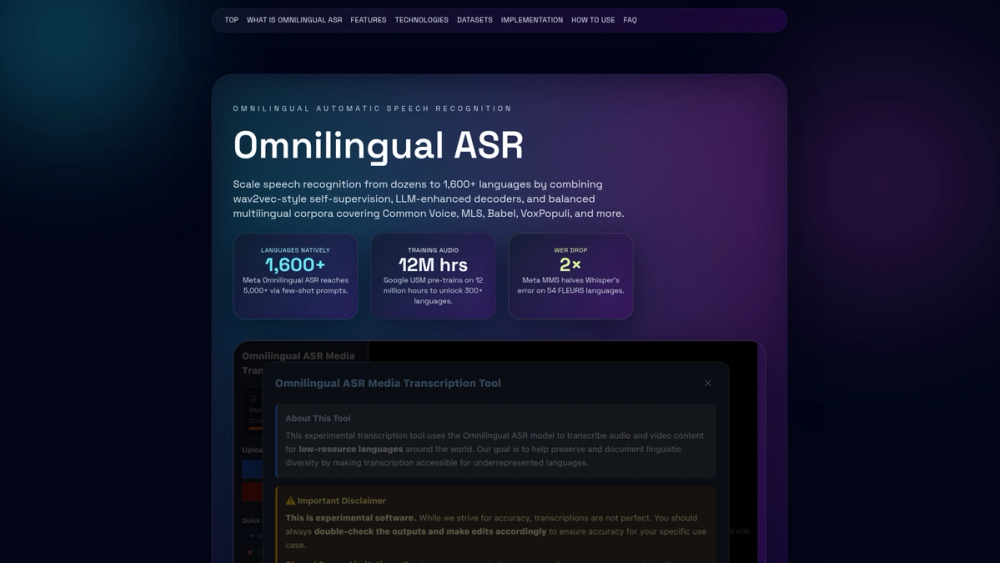

Omnilingual ASR is a speech recognition system that covers more than 1,600 languages with a single model. It combines language-agnostic acoustic representations with decoders enhanced by large language models (LLMs) to transcribe and translate audio, including audio in many low-resource languages.

The primary benefit is scale: developers, businesses, and researchers can deploy one solution for multilingual speech-to-text instead of managing a separate model for each language, which reduces operational costs. It is designed for global companies that need call analytics, content creators who need captioning across languages, and communities that want to bring speech technology to underrepresented languages.

Omnilingual ASR also lowers the barrier to speech technology. By combining self-supervised learning with a small amount of fine-tuning data, it makes speech recognition accessible to low-resource language communities and supports global, cross-lingual applications.

Features

- Unified Multilingual Model: Supports over 1,600 languages within a single deployment, eliminating the complexity and cost of maintaining separate models for each language.

- LLM-Enhanced Decoders: Utilizes transformer decoders fine-tuned as language models to convert acoustic patterns into grammatically correct and contextually rich text, also managing translation tasks.

- Few-Shot Extensibility: Can be extended to new languages (5,000+ in total) with just a few audio samples, enabling community-driven expansion for even the most underrepresented languages.

- Integrated Language Identification (LID): Automatically detects the language being spoken from a pool of thousands, allowing it to seamlessly process mixed-language audio without manual routing.

- Balanced Training for Equity: Employs advanced sampling strategies to oversample data from low-resource languages, narrowing the accuracy gap (Word Error Rate) between them and high-resource languages like English.

- Deployment Flexibility: Available as open-source checkpoints (like Whisper and MMS) for custom hosting or through major cloud provider APIs (Google, AWS, Microsoft) with built-in features like diarization and streaming.

- Language-Adaptive Encoders: Shares speech representations from encoders such as wav2vec 2.0 and Conformer across all languages, so low-resource languages benefit from the abundant data available for high-resource ones.
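
The balanced-training feature above does not name a specific sampling strategy. One common choice in multilingual training is temperature-style sampling, where a language with a share `n_i / N` of the training data is drawn with probability proportional to `(n_i / N) ** alpha`. The sketch below assumes that scheme. The function name, the `alpha` value, and the hour counts are illustrative assumptions, not details of any particular model.

```python
def sampling_probs(hours_per_language, alpha=0.5):
    """Return per-language sampling probabilities p_i proportional to (n_i / N) ** alpha.

    alpha = 1.0 keeps the natural data distribution; smaller values
    shift probability mass toward low-resource languages.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    total = sum(hours_per_language.values())
    weights = {lang: (h / total) ** alpha for lang, h in hours_per_language.items()}
    norm = sum(weights.values())
    return {lang: w / norm for lang, w in weights.items()}


# Made-up training hours for illustration only, not figures from a real corpus.
hours = {"eng": 9000.0, "amh": 90.0, "ceb": 10.0}

natural = sampling_probs(hours, alpha=1.0)
balanced = sampling_probs(hours, alpha=0.5)

for lang in hours:
    print(f"{lang}: natural={natural[lang]:.4f} balanced={balanced[lang]:.4f}")
```

With `alpha` below 1, the low-resource languages are drawn more often than their raw share of the data would suggest, at the expense of the high-resource language.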

How to Use

- Choose a Deployment Method: Decide whether to use a pre-packaged cloud API (e.g., Google Speech-to-Text, AWS Transcribe) or to self-host an open-source model like Whisper or MMS for greater control.

- Prepare Your Audio: For best results, use clear audio with minimal background noise in a standard format such as WAV, MP3, or FLAC. The system can handle mixed-language audio.

- Initiate the Transcription Request: Send your audio file to the API endpoint or the self-hosted model. If the language is unknown, the integrated Language ID will automatically detect it.

- Specify Task (Transcribe or Translate): Many Omnilingual ASR systems are multitask. Specify whether you want a direct transcription in the source language or a translation into a target language (e.g., English).

- Process the Output: The model will return a structured text output, often including timestamps, confidence scores, and speaker labels (diarization) if supported by the specific implementation.

- Fine-Tune for Niche Domains (Optional): For specialized vocabularies (e.g., medical, legal), you can fine-tune the open-source models with your own data to improve accuracy on specific terms.
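
The request and output steps above can be sketched in a provider-neutral way. Everything in this example is hypothetical: the payload field names, the response shape, and the helpers `build_request` and `low_confidence_segments` are assumptions for illustration and do not correspond to a specific cloud API or open-source model. Check your provider's documentation for the real field names.

```python
import json
from pathlib import Path

SUPPORTED_SUFFIXES = {".wav", ".mp3", ".flac"}  # formats named in the steps above
TASKS = {"transcribe", "translate"}


def build_request(audio_path, task="transcribe", language=None, target_language=None):
    """Build a payload for a hypothetical ASR endpoint (field names are assumptions)."""
    suffix = Path(audio_path).suffix.lower()
    if suffix not in SUPPORTED_SUFFIXES:
        raise ValueError(f"unsupported audio format: {suffix or '(none)'}")
    if task not in TASKS:
        raise ValueError(f"task must be one of {sorted(TASKS)}")
    if task == "translate" and target_language is None:
        raise ValueError("translate requires target_language")
    return {
        "audio": str(audio_path),
        "task": task,
        # None means "let the integrated language ID decide".
        "language": language,
        "target_language": target_language if task == "translate" else None,
    }


def low_confidence_segments(response, threshold=0.8):
    """Return segments whose confidence is below the threshold, for manual review."""
    return [s for s in response.get("segments", []) if s.get("confidence", 1.0) < threshold]


request = build_request("call_0001.flac", task="translate", target_language="eng")
print(json.dumps(request, indent=2))

# Made-up response in an assumed shape: timestamps, confidence, speaker labels.
response = {
    "language": "amh",
    "segments": [
        {"start": 0.0, "end": 2.4, "speaker": "S1", "confidence": 0.93, "text": "Hello."},
        {"start": 2.4, "end": 5.1, "speaker": "S2", "confidence": 0.61, "text": "Thank you."},
    ],
}
for seg in low_confidence_segments(response):
    print(f"[{seg['start']:.1f}-{seg['end']:.1f}] {seg['speaker']}: {seg['text']}")
```

Validating the format and task before sending the request surfaces mistakes locally, and filtering by confidence is one simple way to decide which segments need human review.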

Use Cases

- Global Content Captioning: Automatically generate accurate subtitles and captions for videos, podcasts, and live streams in hundreds of languages, making content accessible to a worldwide audience.

- Multilingual Customer Support Analytics: Transcribe and analyze customer service calls from around the globe in their native languages to identify trends, monitor agent performance, and improve customer satisfaction without needing separate systems for each region.

- Voice-Enabled AI Assistants: Build truly multilingual virtual assistants and voice control systems that can understand and respond to users in their preferred language, from Spanish and Mandarin to Amharic and Cebuano.

- Language Preservation and Documentation: Empower linguists and community members to rapidly transcribe and document oral histories, conversations, and cultural heritage in low-resource and endangered languages.

FAQ

What is Omnilingual ASR?

Omnilingual ASR is a type of AI system that performs automatic speech recognition (ASR) for more than 1,600 languages using a single, unified model. It combines shared speech encoders with LLM-powered decoders to understand and transcribe speech without needing a separate model for each language.

How is this different from traditional ASR systems?

Traditional ASR systems are typically trained for a single language. Supporting multiple languages requires training, managing, and deploying separate models, which is expensive and inefficient. Omnilingual ASR uses a language-agnostic approach, sharing learning across languages to reduce costs and improve performance on low-resource languages.

Can it handle audio with multiple languages mixed together?

Yes. Many Omnilingual ASR models, such as Meta's MMS and OpenAI's Whisper, have integrated Language Identification (LID). They can detect which language is being spoken, often on a segment-by-segment basis, and transcribe it accordingly.
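
As a rough illustration of segment-level handling, the sketch below merges consecutive segments that share a detected language into spans, which could then be transcribed or routed per language. The segment dictionaries and the `merge_by_language` helper are hypothetical. Real models expose language labels in their own formats.

```python
from itertools import groupby


def merge_by_language(segments):
    """Merge consecutive segments with the same language label into one span."""
    spans = []
    for lang, run in groupby(segments, key=lambda s: s["lang"]):
        run = list(run)
        spans.append({"lang": lang, "start": run[0]["start"], "end": run[-1]["end"]})
    return spans


# Made-up language ID output for a 12-second clip that switches languages.
segments = [
    {"start": 0.0, "end": 3.0, "lang": "spa"},
    {"start": 3.0, "end": 6.0, "lang": "spa"},
    {"start": 6.0, "end": 9.0, "lang": "eng"},
    {"start": 9.0, "end": 12.0, "lang": "spa"},
]

spans = merge_by_language(segments)
for span in spans:
    print(f"{span['lang']}: {span['start']:.1f}s to {span['end']:.1f}s")
```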

How accurate is it for low-resource languages?

Accuracy has improved substantially. Thanks to self-supervised learning and balanced training techniques, Meta's MMS reports more than halving Whisper's Word Error Rate (WER) on 54 languages of the FLEURS benchmark. Low-resource languages still trail high-resource ones, but the gap is narrowing, with many supported languages achieving a Character Error Rate (CER) below 10%.
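
For readers who want to measure these metrics on their own data, here is a minimal sketch of WER and CER based on Levenshtein edit distance. It assumes whitespace tokenization and applies no text normalization (casing, punctuation), which published benchmarks usually do apply. The example sentences are made up.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (substitutions, insertions, deletions)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # deletion of a reference item
                curr[j - 1] + 1,     # insertion of a hypothesis item
                prev[j - 1] + cost,  # substitution or match
            ))
        prev = curr
    return prev[-1]


def wer(reference, hypothesis):
    """Word Error Rate: word-level edit distance divided by reference word count."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference, hypothesis):
    """Character Error Rate: character-level edit distance divided by reference length."""
    if not reference:
        raise ValueError("reference must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


reference = "the cat sat on the mat"
hypothesis = "the cat sit on mat"
print(f"WER = {wer(reference, hypothesis):.3f}")
print(f"CER = {cer(reference, hypothesis):.3f}")
```

CER is often reported alongside or instead of WER for languages where word boundaries are ambiguous or words are long, since a single wrong character would otherwise count as a whole word error.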

Is Omnilingual ASR open source?

Several foundational Omnilingual ASR models are available as open-source checkpoints. This includes OpenAI's Whisper, Meta's Massively Multilingual Speech (MMS), and the upcoming OmniASR suite (Apache-2.0). This allows developers to self-host and customize the models.

How can I add a language that isn't supported?

A key feature is few-shot extensibility. Using just a handful of audio recordings, you can fine-tune or prompt the model to recognize a new, previously unsupported language, making it possible for communities to add their own languages.

Can it do more than just transcribe?

Yes. The decoders are often multitask. They can perform direct transcription (speech-to-text) in the original language or translate the speech into a different target language, such as English (speech-to-text translation).